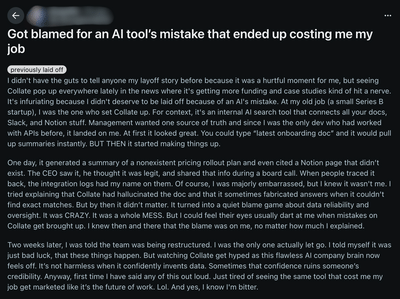

Artificial intelligence has become part of almost every office. It writes emails, pulls up documents, and answers questions faster than anyone on the team. But what happens when it gives a wrong answer? A Reddit post recently went viral after a developer described losing their job because an AI tool made up information. The AI had created a fake document that looked real enough for the CEO to use in a board meeting. When the mistake came to light, the blame fell on the person who set it up. The story struck a chord because it shows something many of us already sense: we are still figuring out what it means to work alongside machines that sound smart but don’t always tell the truth.

The human cost of AI mistakes

The Reddit user was asked to connect an internal AI tool to the company’s documents and chats. It was meant to make work easier by finding and summarising files. For a while, it worked fine. Then one day, it pulled out a summary of a project that didn’t exist. The CEO mentioned it on a call, and everything snowballed from there. When people checked the system logs, the employee’s name was attached to the query. They tried to explain that the AI had made it up, but by then the trust was gone.

This kind of thing is becoming common. AI tools don’t actually understand information; they guess what sounds right. Sometimes those guesses are close enough to fool anyone. The problem isn’t that AI makes mistakes. The problem is how quickly we treat those mistakes as facts.

When machines sound sure but aren’t

AI “hallucinations” are what happens when a tool gives you a made-up answer that sounds right. This isn’t a glitch; it’s part of how these systems work. They look for patterns and fill in gaps. When they can’t find an exact match, they still produce an answer that sounds confident.

In a workplace, that confidence can be dangerous. A tool might summarise a report, misread a file, or link to a page that doesn’t exist. If no one double-checks, wrong information ends up in emails, presentations, or meetings. The more we rely on AI without verifying it, the easier it is for small errors to grow into big problems.

Why accountability must stay human

When things go wrong, the AI doesn’t take the blame. The person who set it up does. That’s what happened in the Reddit story. The employee wasn’t fired for bad work; they were fired because the company treated a tool’s output as fact.

AI should make work easier, not take responsibility away from people. It should help with the heavy lifting, but we still need humans to decide what’s right. That means checking what the tool says before using it in reports or meetings. It also means creating a clear process for how AI tools are used at work.

Here’s what that could look like:

- Check every AI summary against the original data.

- Keep a record of when and how AI was used to make a decision.

- Share the responsibility for AI systems so no one person is blamed for everything.

- Talk openly about the tool’s limits instead of pretending it’s perfect.
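
As a rough illustration of the first two points, here is a minimal Python sketch of an audit record for an AI-generated summary, with a check that every file the summary cites actually exists. All names here (`AISummaryRecord`, `missing_sources`, `approve`, and the file names) are hypothetical and don’t come from the Reddit post or any particular tool. It also assumes the tool reports which files it drew on, which not every tool does.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional, Set


@dataclass
class AISummaryRecord:
    """One audit entry: who asked, what the tool said, which files it cited."""
    requested_by: str
    query: str
    summary: str
    cited_sources: List[str]
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    verified_by: Optional[str] = None  # stays None until a person signs off


def missing_sources(record: AISummaryRecord, known_documents: Set[str]) -> List[str]:
    """Return cited sources that are not in the document store."""
    return [s for s in record.cited_sources if s not in known_documents]


def approve(record: AISummaryRecord, reviewer: str, known_documents: Set[str]) -> None:
    """Record a human sign-off, but only if every cited source exists."""
    if not record.cited_sources:
        raise ValueError("summary cites no sources; nothing to check it against")
    missing = missing_sources(record, known_documents)
    if missing:
        raise ValueError(f"cited sources not found: {missing}")
    record.verified_by = reviewer


# Hypothetical usage: the second summary cites a file that does not exist.
docs = {"q3-report.pdf", "roadmap.md"}
ok = AISummaryRecord("alice", "summarise Q3", "Q3 revenue grew.", ["q3-report.pdf"])
approve(ok, "bob", docs)

bad = AISummaryRecord(
    "alice", "status of Project X", "Project X is on track.", ["project-x-plan.docx"]
)
print(missing_sources(bad, docs))
```

A check like this only catches citations to files that don’t exist. It can’t tell whether a summary matches what a real file says, so a person still has to read the source before signing off.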

Building a culture that understands AI

Using AI wisely doesn’t mean avoiding it. It means knowing how it works and where it can go wrong. Every person who uses AI at work should have some idea of what the tool can and can’t do. That understanding prevents overconfidence.

Companies also need regular checks to make sure their tools are working properly. Just like you’d test a system for security or bugs, you should test AI for accuracy. And when it makes a mistake, there should be a simple, open way to fix it.
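
One simple way to run such a check is to keep a short list of questions whose answers are already known and see how often the tool gets them right. The Python sketch below assumes the tool can be called as a function that takes a question and returns text; `accuracy_on_known_answers`, `fake_tool`, and the sample questions are all made up for illustration.

```python
from typing import Callable, List, Tuple


def accuracy_on_known_answers(
    ask: Callable[[str], str],
    cases: List[Tuple[str, str]],
) -> float:
    """Return the fraction of questions whose answer contains the expected text."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = sum(
        1
        for question, expected in cases
        if expected.lower() in ask(question).lower()
    )
    return correct / len(cases)


# Hypothetical stand-in for the real tool, with one wrong answer baked in.
def fake_tool(question: str) -> str:
    canned = {
        "Who leads Project Atlas?": "Project Atlas is led by Dana.",
        "When did Project Atlas start?": "It started in 2019.",
    }
    return canned.get(question, "I don't know.")


cases = [
    ("Who leads Project Atlas?", "Dana"),
    ("When did Project Atlas start?", "2021"),
]
score = accuracy_on_known_answers(fake_tool, cases)
print(f"{score:.0%} of known answers matched")
```

Matching on a substring is crude, and a passing score doesn’t prove the tool is right elsewhere. The point is only to notice when accuracy drops, so someone can look into it.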

Most importantly, people should feel safe to speak up when something looks off. If an AI tool makes an error, it shouldn’t turn into finger-pointing. Mistakes are how we learn to use new technology better.

AI can be a good teammate, but it still needs a human in the loop — someone who questions, confirms, and keeps things grounded. Without that, it’s easy for the technology to look smarter than it really is.

The bigger picture

Stories like this one are reminders that new tools don’t erase old responsibilities. As AI becomes part of everyday work, the real challenge isn’t getting it to do more. It’s learning how to keep people in control.

Technology can save time, but it shouldn’t cost someone their reputation. AI is only as useful as the care we take in using it. When we combine automation with common sense and a bit of humility, we get the best of both worlds. When we forget that, even the smartest system can lead us into trouble.
